Overview

In today’s large, long-running IT projects, one of the main problems is system complexity, which makes the system hard to modify later. Changing business circumstances and requirements are the only constant in the IT world. Many projects out there have become too expensive to maintain. “Let’s rewrite this project from scratch and kill this old monster.” Does that sound familiar?

How can we build systems that stay simple to understand and change in the long run? This article describes the main factors that make applications difficult or easy to change and proposes an architecture that supports long-term maintainability.

Main issues with maintaining large applications

- High coupling causes unpredictable side effects and increases both the scope of each change and the risk of introducing new defects.

- Difficulty in understanding: every change requires extensive, time-consuming analysis, and onboarding new people into the project takes significant time.

- Difficulty in testing: high coupling leads to “mocking hell”.

Loosely coupled architecture

- Minimal code level dependencies

- Each implementation module is easily testable

- Each implementation module can be replaced without affecting other modules

Modules

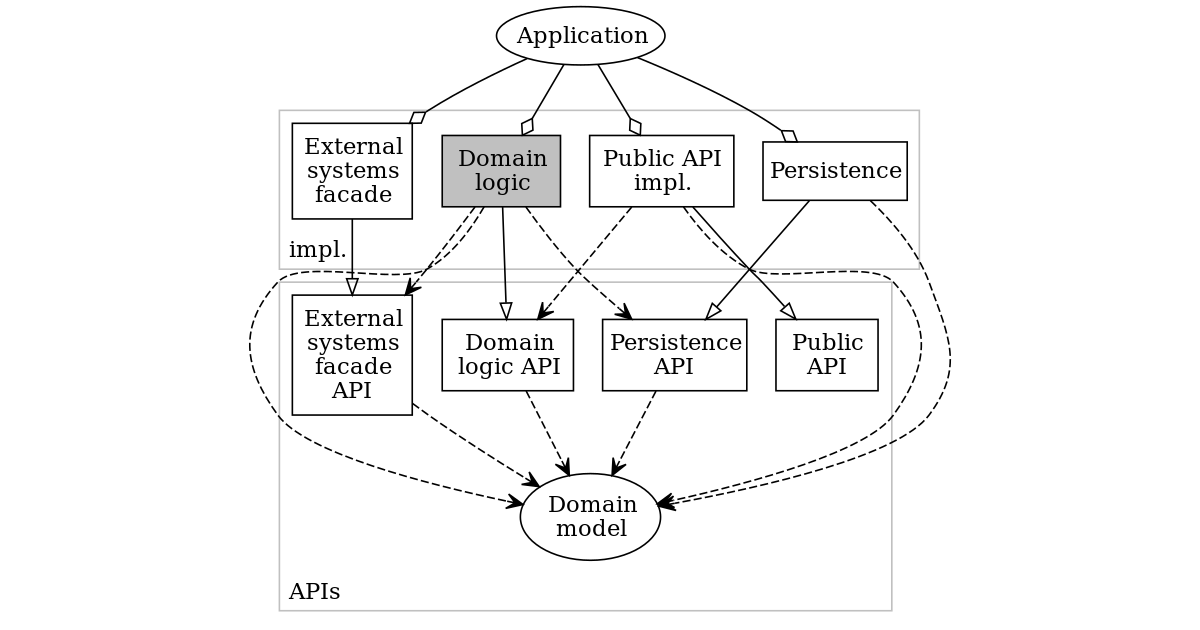

The diagram above illustrates the modules and the dependencies between them. None of the implementation modules depends on another implementation module; they communicate only through API modules. Note that these dependencies are enforced at the code level. For example, if we implemented this architecture in Java, each module would be built as a separate JAR that depends only on the APIs it uses. All implementation modules would be assembled only in the application module.

This approach is also known as the ports-and-adapters pattern or hexagonal architecture. It differs from the traditional layered approach, where upper layers have code dependencies on all lower layers. A layered architecture makes it possible to introduce unwanted dependencies between layers that are not directly related (for example, the view could use persistence directly).

Domain model

Business objects used to communicate between modules. If DDD is in use, business logic may also be implemented inside the business objects, as long as it does not create additional dependencies on other modules.
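A minimal sketch in Java (the language the article uses as its example) of a domain model object that carries DDD-style business logic. `Order`, `OrderLine` and the positive-quantity rule are hypothetical; what matters is that the logic relies only on other domain model types and standard library classes.

```java
import java.math.BigDecimal;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Objects;

// Domain model module: plain business objects with no dependencies on
// persistence, frameworks or external systems.
record OrderLine(String productId, int quantity, BigDecimal unitPrice) {
    OrderLine {
        Objects.requireNonNull(productId, "productId");
        Objects.requireNonNull(unitPrice, "unitPrice");
        if (quantity <= 0) {
            throw new IllegalArgumentException("quantity must be positive");
        }
    }

    // Business rule that only uses domain model types.
    BigDecimal subtotal() {
        return unitPrice.multiply(BigDecimal.valueOf(quantity));
    }
}

final class Order {
    private final String id;
    private final List<OrderLine> lines = new ArrayList<>();

    Order(String id) {
        this.id = Objects.requireNonNull(id, "id");
    }

    String id() {
        return id;
    }

    void addLine(OrderLine line) {
        lines.add(Objects.requireNonNull(line, "line"));
    }

    // Read-only view so callers cannot bypass addLine().
    List<OrderLine> lines() {
        return Collections.unmodifiableList(lines);
    }

    BigDecimal total() {
        return lines.stream()
                .map(OrderLine::subtotal)
                .reduce(BigDecimal.ZERO, BigDecimal::add);
    }
}
```

Because `Order` depends on nothing outside the domain model, any module that already depends on the domain model can use it without pulling in persistence or framework code.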

Domain logic

The API module exposes all logic that may be made available to the outside world through the public API. It is a set of interfaces that use the domain model to transfer data. Changes to this API require refactoring of the public API implementation. It also defines the transaction boundary.

The implementation module contains all the logic of the system (with DDD, part of the logic can move into the domain model). It is the heart of the application (in hexagonal architecture, the center of the hexagon). It should be kept clean, with minimal dependencies on external frameworks and libraries.
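The split between the domain logic API and its implementation might look like the following sketch. All names (`OrderService`, `OrderRepository`, `CreditCheck`) are hypothetical; the persistence and external systems facade APIs are reduced to single interfaces, and the implementation receives them through its constructor so that it never refers to a concrete technology.

```java
import java.math.BigDecimal;
import java.util.Optional;

// Domain model module (simplified).
record Order(String id, String customerId, BigDecimal total) {}

// Domain logic API module: the operations other modules may call.
interface OrderService {
    Order placeOrder(String orderId, String customerId, BigDecimal total);
}

// Persistence API module (implemented elsewhere).
interface OrderRepository {
    void save(Order order);
    Optional<Order> findById(String id);
}

// External systems facade API module (implemented elsewhere).
interface CreditCheck {
    boolean isCreditworthy(String customerId, BigDecimal amount);
}

// Domain logic implementation module: depends only on the APIs above,
// never on a concrete database, HTTP client or framework.
final class DefaultOrderService implements OrderService {
    private final OrderRepository orders;
    private final CreditCheck creditCheck;

    DefaultOrderService(OrderRepository orders, CreditCheck creditCheck) {
        this.orders = orders;
        this.creditCheck = creditCheck;
    }

    @Override
    public Order placeOrder(String orderId, String customerId, BigDecimal total) {
        if (orders.findById(orderId).isPresent()) {
            throw new IllegalStateException("order already exists: " + orderId);
        }
        if (!creditCheck.isCreditworthy(customerId, total)) {
            throw new IllegalStateException("credit check failed for customer " + customerId);
        }
        Order order = new Order(orderId, customerId, total);
        orders.save(order);
        return order;
    }
}
```

Constructor injection keeps the implementation free of any dependency-injection framework; the application module (or a test) decides which implementations of `OrderRepository` and `CreditCheck` are passed in.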

Persistence

An API that encapsulates access to the database or any other persistent store. The API should express what is persisted (the persistence logic), decoupled from the underlying technology.

The implementation module handles the details of how persistence actually works. It should be possible to implement persistence with different technologies (relational DB, NoSQL DB, etc.) without any changes to the API.
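As a sketch, assuming a hypothetical `Customer` type and a `customers(id, name)` table, the persistence API below states only what can be stored and retrieved, and two implementation modules realize it with different technologies: an in-memory map and plain JDBC. Both translate failures into the same unchecked exception so that technology-specific exceptions such as `SQLException` do not leak through the API.

```java
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;
import javax.sql.DataSource;

// Domain model type (simplified).
record Customer(String id, String name) {}

// Persistence API module: says what can be stored and found, not how.
interface CustomerRepository {
    /** Stores a new customer; fails if the ID is already taken. */
    void add(Customer customer);

    Optional<Customer> findById(String id);
}

// Implementation module #1: in memory (useful for prototypes and tests).
final class InMemoryCustomerRepository implements CustomerRepository {
    private final Map<String, Customer> store = new ConcurrentHashMap<>();

    @Override
    public void add(Customer customer) {
        if (store.putIfAbsent(customer.id(), customer) != null) {
            throw new IllegalStateException("customer already exists: " + customer.id());
        }
    }

    @Override
    public Optional<Customer> findById(String id) {
        return Optional.ofNullable(store.get(id));
    }
}

// Implementation module #2: relational database via plain JDBC.
// Assumes a hypothetical table customers(id VARCHAR PRIMARY KEY, name VARCHAR).
final class JdbcCustomerRepository implements CustomerRepository {
    private final DataSource dataSource;

    JdbcCustomerRepository(DataSource dataSource) {
        this.dataSource = dataSource;
    }

    @Override
    public void add(Customer customer) {
        String sql = "INSERT INTO customers (id, name) VALUES (?, ?)";
        try (Connection connection = dataSource.getConnection();
             PreparedStatement statement = connection.prepareStatement(sql)) {
            statement.setString(1, customer.id());
            statement.setString(2, customer.name());
            statement.executeUpdate();
        } catch (SQLException e) {
            // Covers duplicate keys too; the JDBC exception stays inside this module.
            throw new IllegalStateException("could not add customer " + customer.id(), e);
        }
    }

    @Override
    public Optional<Customer> findById(String id) {
        String sql = "SELECT id, name FROM customers WHERE id = ?";
        try (Connection connection = dataSource.getConnection();
             PreparedStatement statement = connection.prepareStatement(sql)) {
            statement.setString(1, id);
            try (ResultSet rows = statement.executeQuery()) {
                return rows.next()
                        ? Optional.of(new Customer(rows.getString("id"), rows.getString("name")))
                        : Optional.empty();
            }
        } catch (SQLException e) {
            throw new IllegalStateException("could not load customer " + id, e);
        }
    }
}
```

Swapping one implementation for the other is a change in the application module’s wiring only; callers of `CustomerRepository` are unaffected.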

External systems facade

It is crucial that our application logic is not affected by changes made in external systems. We can achieve this by introducing an external systems facade API module. This API should expose only the functionality of the external system that the domain logic actually uses (YAGNI).

The external systems facade implementation module translates (maps) the external systems’ APIs to the facade API used by our domain logic. It also takes care of caching, if applicable. If an external system’s API changes, no changes to the domain logic module are required; only the facade implementation has to be adjusted.
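The mapping role of the facade implementation can be sketched as follows. `ExternalRatesClient` and `ExternalRateResponse` stand in for a hypothetical external exchange-rate service (for example, a generated REST client) that encodes currency pairs as `"EURUSD"` and returns rates as strings; the facade API `ExchangeRates` exposes only the one operation the domain logic needs. Caching is left out of this sketch.

```java
import java.math.BigDecimal;

// External system's own contract, e.g. a generated REST client (hypothetical).
record ExternalRateResponse(String currencyPair, String rate) {}

interface ExternalRatesClient {
    ExternalRateResponse fetchRate(String currencyPair); // pair encoded as e.g. "EURUSD"
}

// External systems facade API: only what the domain logic needs (YAGNI).
interface ExchangeRates {
    BigDecimal rate(String fromCurrency, String toCurrency);
}

// Facade implementation: the only place that knows the external contract.
final class ExchangeRatesFacade implements ExchangeRates {
    private final ExternalRatesClient client;

    ExchangeRatesFacade(ExternalRatesClient client) {
        this.client = client;
    }

    @Override
    public BigDecimal rate(String fromCurrency, String toCurrency) {
        // Translate the domain request into the external system's format...
        ExternalRateResponse response = client.fetchRate(fromCurrency + toCurrency);
        // ...and its response back into a domain-friendly type.
        try {
            return new BigDecimal(response.rate());
        } catch (NumberFormatException e) {
            throw new IllegalStateException("unexpected rate format: " + response.rate(), e);
        }
    }
}
```

If the rates provider changes its pair format or response type, only `ExchangeRatesFacade` has to change.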

When you integrate the application with more than one external system, it may be a good idea to introduce dedicated facade API and implementation modules for each system. Likewise, if you consume multiple services from other systems, it may be useful to split one big facade into several smaller ones.

Note that the details of all communication initiated by the domain logic towards the outside world are encapsulated in this module. This includes all kinds of communication, both synchronous (REST, SOAP) and asynchronous (sending messages to queues).

Public API

The public API module represents what consumers of your application can access. It consists of service interfaces and public models. It should always be backward compatible: breaking changes should be published as new versions of the services while the old versions remain operational.

The public API implementation module maps public API calls and models to the domain API and model. It also handles security concerns.
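A sketch of a versioned public API with its implementation, under these assumptions: `Customer` and `CustomerService` represent the domain model and domain logic API, `Caller` is a hypothetical representation of the authenticated consumer, and the `customer:read` role name is made up. A breaking change (splitting the full name into first and last name) is published as `CustomerApiV2` while `CustomerApiV1` stays operational, and both implementations perform the security check before mapping the domain model to the public model.

```java
import java.util.Set;

// Domain logic API and model (internal; free to change).
record Customer(String id, String firstName, String lastName) {}

interface CustomerService {
    Customer find(String id);
}

// Hypothetical representation of an authenticated caller.
record Caller(String name, Set<String> roles) {}

// Public API module: published, versioned contracts.
record CustomerV1(String id, String fullName) {}
record CustomerV2(String id, String firstName, String lastName) {}

interface CustomerApiV1 {
    CustomerV1 getCustomer(Caller caller, String id);
}

interface CustomerApiV2 {
    CustomerV2 getCustomer(Caller caller, String id);
}

// Public API implementation module: security checks and mapping live here,
// so the domain logic stays free of both.
final class AccessControl {
    private AccessControl() {}

    static void requireRole(Caller caller, String role) {
        if (caller == null || !caller.roles().contains(role)) {
            throw new SecurityException("missing role: " + role);
        }
    }
}

final class CustomerApiV1Impl implements CustomerApiV1 {
    private final CustomerService customers;

    CustomerApiV1Impl(CustomerService customers) {
        this.customers = customers;
    }

    @Override
    public CustomerV1 getCustomer(Caller caller, String id) {
        AccessControl.requireRole(caller, "customer:read");
        Customer c = customers.find(id);
        // v1 consumers keep receiving the old shape, derived from the current model.
        return new CustomerV1(c.id(), c.firstName() + " " + c.lastName());
    }
}

final class CustomerApiV2Impl implements CustomerApiV2 {
    private final CustomerService customers;

    CustomerApiV2Impl(CustomerService customers) {
        this.customers = customers;
    }

    @Override
    public CustomerV2 getCustomer(Caller caller, String id) {
        AccessControl.requireRole(caller, "customer:read");
        Customer c = customers.find(id);
        return new CustomerV2(c.id(), c.firstName(), c.lastName());
    }
}
```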

Application

This module assembles all implementation modules into a bundle that can be started. Although it assembles the implementation modules, it does not depend on their logic as such: changes in the logic of any implementation module do not require changes in the application module.

This module handles everything related to deployment and the runtime environment, and it is where configuration is stored. There can be multiple application modules if the application runs in different environments (for example, a command-line app, an Android app, and a web app).
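As an illustration, the application module could assemble the implementation modules based on configuration, as in the sketch below. The `notifier` property and all class names are hypothetical. Separate application modules for different environments would each contain their own variant of this wiring and configuration, while the implementation modules stay untouched.

```java
import java.util.Properties;

// API modules (simplified).
interface Notifier {
    void send(String customerId, String message);
}

interface WelcomeService {
    void welcome(String customerId);
}

// Implementation modules: each one knows only the APIs above.
final class WelcomeServiceImpl implements WelcomeService {
    private final Notifier notifier;

    WelcomeServiceImpl(Notifier notifier) {
        this.notifier = notifier;
    }

    @Override
    public void welcome(String customerId) {
        notifier.send(customerId, "Welcome aboard!");
    }
}

final class ConsoleNotifier implements Notifier {
    @Override
    public void send(String customerId, String message) {
        System.out.println("[to " + customerId + "] " + message);
    }
}

final class NoOpNotifier implements Notifier {
    @Override
    public void send(String customerId, String message) {
        // Intentionally does nothing, e.g. for an offline demo build.
    }
}

// Application module: the only place that sees every implementation and
// chooses between them based on configuration.
final class ApplicationAssembly {
    private ApplicationAssembly() {}

    static WelcomeService assemble(Properties config) {
        String notifierKind = config.getProperty("notifier", "console");
        Notifier notifier = switch (notifierKind) {
            case "console" -> new ConsoleNotifier();
            case "none" -> new NoOpNotifier();
            default -> throw new IllegalArgumentException("unknown notifier: " + notifierKind);
        };
        return new WelcomeServiceImpl(notifier);
    }
}
```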

Additional considerations

Testing

The proposed design makes testing simpler: each implementation module should be unit- and integration-tested in isolation.

Module integration tests

Module integration tests should treat the module as a black box. Tests should use the module API as the communication interface and mock any modules or external systems the module depends on, for example:

- Domain logic module integration tests call the domain logic API and use mocked external systems and persistence APIs.

- Persistence module integration tests call the persistence API, prepare data in the underlying store and check the results in that store (for example, using an in-memory database).

- External systems module integration tests call the external systems API and mock the external services at the wire-format level (for example, REST endpoints exposed over HTTP with a tool like WireMock).

- Public API module integration tests call the public API and use a mocked domain logic API.

Application integration tests

Another level of integration testing is required for the whole application. These tests verify that all modules work well together.

Application integration tests call the public API and simulate external systems at the wire-format level. They also use the database (in-memory or real) to provide test data and check results.

A behavior-driven development (BDD) approach may be used for application integration tests.

Domain module test helpers

It can be beneficial to create an additional test module (or library) that depends only on the domain model and is used by other modules only in a test context. It provides reusable test building blocks that speed up test preparation and improve readability. It could contain:

- Domain model test data builders (including predefined common test data sets implemented with builders). See more on this topic in this article.

- Business domain assertions. These can be written manually, generated automatically (see, for example, the AssertJ assertions generator), or produced with a mixed approach (generated automatically and extended manually).
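A sketch of what such a test helper module could contain, assuming a simplified hypothetical `Customer` domain type: a test data builder with defaults and a predefined data set, plus hand-written domain assertions in the fluent style that AssertJ popularized (without depending on AssertJ itself). The default values are arbitrary.

```java
import java.math.BigDecimal;

// Domain model type (simplified).
record Customer(String id, String name, String country, BigDecimal creditLimit) {}

// Test helper module: depends only on the domain model and is used only from tests.
final class CustomerBuilder {
    // Defaults so that each test sets only what it cares about.
    private String id = "c-1";
    private String name = "Test Customer";
    private String country = "PL";
    private BigDecimal creditLimit = new BigDecimal("1000");

    static CustomerBuilder aCustomer() {
        return new CustomerBuilder();
    }

    // A predefined, commonly used data set built on top of the builder.
    static CustomerBuilder aCustomerWithoutCredit() {
        return aCustomer().withCreditLimit(BigDecimal.ZERO);
    }

    CustomerBuilder withId(String id) { this.id = id; return this; }
    CustomerBuilder withName(String name) { this.name = name; return this; }
    CustomerBuilder withCountry(String country) { this.country = country; return this; }
    CustomerBuilder withCreditLimit(BigDecimal creditLimit) { this.creditLimit = creditLimit; return this; }

    Customer build() {
        return new Customer(id, name, country, creditLimit);
    }
}

// Hand-written business domain assertions with readable failure messages.
final class CustomerAssert {
    private final Customer actual;

    private CustomerAssert(Customer actual) {
        this.actual = actual;
    }

    static CustomerAssert assertThat(Customer actual) {
        return new CustomerAssert(actual);
    }

    CustomerAssert livesIn(String expectedCountry) {
        if (!actual.country().equals(expectedCountry)) {
            throw new AssertionError("expected customer " + actual.id()
                    + " to live in " + expectedCountry + " but was " + actual.country());
        }
        return this;
    }

    CustomerAssert canBuyOnCredit() {
        if (actual.creditLimit().signum() <= 0) {
            throw new AssertionError("expected customer " + actual.id()
                    + " to have a credit limit, but it was " + actual.creditLimit());
        }
        return this;
    }
}
```

In a test this reads as `CustomerAssert.assertThat(CustomerBuilder.aCustomer().withCountry("DE").build()).livesIn("DE").canBuyOnCredit();`, which states the business expectation rather than field-by-field comparisons.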

Caching

Caching logic should be encapsulated inside the module so that other modules using it are not aware of it. Most commonly, some form of caching is used in the persistence module and in the external systems facade module. It can also be used in the domain logic module if required (for example, to cache the results of resource-intensive calculations).
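One way to keep caching invisible to other modules is a decorator that implements the module’s own API and wraps the uncached implementation, sketched below with a hypothetical `ProductCatalog`. The cache here is an unbounded map that also remembers misses; a real module would likely need size limits and expiry.

```java
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;

record Product(String id, String name) {}

// Module API: callers cannot tell whether results are cached.
interface ProductCatalog {
    Optional<Product> findById(String id);
}

// Caching decorator living inside the implementation module. It implements
// the same API, so other modules are unaware of it.
final class CachingProductCatalog implements ProductCatalog {
    private final ProductCatalog delegate;
    // Unbounded and never expires: acceptable only for this sketch.
    private final Map<String, Optional<Product>> cache = new ConcurrentHashMap<>();

    CachingProductCatalog(ProductCatalog delegate) {
        this.delegate = delegate;
    }

    @Override
    public Optional<Product> findById(String id) {
        // Calls the delegate only on the first lookup of each ID.
        return cache.computeIfAbsent(id, delegate::findById);
    }
}
```

Only the wiring inside the module (or the application module) decides whether callers get `CachingProductCatalog` or the plain implementation.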

Transactions

Transactions should be applied in the domain logic implementation and should not cross its boundaries. If transaction support is required from other modules (for example, external systems or persistence), it should be exposed through those modules’ APIs.
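The sketch below shows one way to follow this rule, assuming the persistence API exposes a hypothetical `TransactionRunner` interface. The domain logic implementation (`TransferService`) opens and closes the transaction around the whole business operation, so the transaction never leaves the domain logic. The in-memory implementation only emulates rollback with a snapshot; a JDBC-based one would typically use `Connection.setAutoCommit(false)`, `commit()` and `rollback()` instead.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Supplier;

// Persistence API module: exposes transaction support without revealing
// the underlying technology.
interface TransactionRunner {
    <T> T inTransaction(Supplier<T> work);
}

interface AccountRepository {
    long balance(String accountId);
    void setBalance(String accountId, long balance);
}

// Domain logic implementation: the transaction wraps the whole business
// operation and ends here; callers never see it.
final class TransferService {
    private final TransactionRunner tx;
    private final AccountRepository accounts;

    TransferService(TransactionRunner tx, AccountRepository accounts) {
        this.tx = tx;
        this.accounts = accounts;
    }

    void transfer(String from, String to, long amount) {
        tx.inTransaction(() -> {
            long fromBalance = accounts.balance(from);
            if (fromBalance < amount) {
                throw new IllegalStateException("insufficient funds in " + from);
            }
            accounts.setBalance(from, fromBalance - amount);
            accounts.setBalance(to, accounts.balance(to) + amount);
            return null;
        });
    }
}

// Persistence implementation (in memory, single-threaded, for illustration):
// a transaction is emulated by restoring a snapshot when the work fails.
final class InMemoryAccounts implements AccountRepository, TransactionRunner {
    private final Map<String, Long> balances = new HashMap<>();

    @Override
    public long balance(String accountId) {
        return balances.getOrDefault(accountId, 0L);
    }

    @Override
    public void setBalance(String accountId, long balance) {
        balances.put(accountId, balance);
    }

    @Override
    public <T> T inTransaction(Supplier<T> work) {
        Map<String, Long> snapshot = new HashMap<>(balances);
        try {
            return work.get();
        } catch (RuntimeException e) {
            balances.clear();
            balances.putAll(snapshot); // roll back
            throw e;
        }
    }
}
```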

Security

Security concerns should be handled in the public API and its implementation, and should not pollute the business domain logic.

Growing bigger?

The module organization described above fits moderately sized systems well and may serve as their final architecture. However, as more and more logic is added to the system, a single module can become too complex, which may turn into an issue. The approach below describes an evolution that helps tackle the complexity of very large systems: from the modularized monolith, through a monolith organized into domains, to microservices.

Domains

Large systems have multiple responsibilities. For example, an e-commerce system manages customer data, handles orders, processes payments, takes care of warehouses, etc. Each of these responsibilities is a separate domain, and for each domain we can create a separate set of modules.

Domains should communicate with each other only through their public APIs, to keep coupling between them to a minimum.
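A sketch of two domains talking through a public API, with hypothetical customers and orders domains. One possible arrangement, assumed here rather than prescribed by the architecture, is for the orders domain to treat the customers public API like any other external system and hide it behind its own facade, so that orders logic depends only on `CustomerEligibility`.

```java
import java.util.Map;

// Customers domain: public API module (all that other domains may see).
record CustomerSummary(String id, boolean active) {}

interface CustomersPublicApi {
    CustomerSummary getCustomer(String id);
}

// Customers domain: public API implementation (hidden from other domains).
// The map stands in for the customers domain logic.
final class CustomersPublicApiImpl implements CustomersPublicApi {
    private final Map<String, Boolean> activeById;

    CustomersPublicApiImpl(Map<String, Boolean> activeById) {
        this.activeById = Map.copyOf(activeById);
    }

    @Override
    public CustomerSummary getCustomer(String id) {
        Boolean active = activeById.get(id);
        if (active == null) {
            throw new IllegalArgumentException("unknown customer: " + id);
        }
        return new CustomerSummary(id, active);
    }
}

// Orders domain: its own facade API, expressed in the orders domain's terms.
interface CustomerEligibility {
    boolean mayPlaceOrders(String customerId);
}

// Orders domain: facade implementation, the only orders code that touches
// the customers public API.
final class CustomersFacade implements CustomerEligibility {
    private final CustomersPublicApi customers;

    CustomersFacade(CustomersPublicApi customers) {
        this.customers = customers;
    }

    @Override
    public boolean mayPlaceOrders(String customerId) {
        return customers.getCustomer(customerId).active();
    }
}
```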

Monorepo

A monorepo is an approach in which all modules are hosted in a single (mono) code repository. It requires less build and deployment effort, since all modules are built in a single run, and compatibility issues are easily discovered in integration tests. With a monorepo it is also easier to move logic between modules (for example, when breaking the monolith into domains or splitting domains into smaller ones). This is especially useful during the transition from a monolith to a microservices architecture, when it is hard to define the breakdown up front.

With a monorepo, the application can be packaged either as a single monolith or as microservices.

Microservices

Moving to microservices means transforming each domain into an independent application running as a microservice. We can start this transition in the monorepo and later move to multiple repositories if needed.

Monolith or microservices?

We usually treat monolith and microservices as mutually exclusive alternatives. With the proposed architecture, both deployment models can be used in parallel. For example, for local deployment one could use a monolith that packages all domains into a single app, and when scalability is needed, deploy exactly the same logic as microservices. Only the configuration and packaging differ, and they need to be prepared for both approaches.
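To illustrate how the same logic can be packaged both ways, the sketch below implements a hypothetical customers domain public API twice: once as an in-process call for the monolith, and once as an HTTP client for a microservice deployment, using the JDK’s `java.net.http.HttpClient`. The endpoint path and the plain-text `true`/`false` response are assumptions, and customer IDs are assumed to be URL-safe.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.util.Set;

// Customers domain public API, shared by both deployment models.
interface CustomersPublicApi {
    boolean isActive(String customerId);
}

// Monolith packaging: calls are plain in-process method calls.
// The set of active IDs stands in for the real customers domain logic.
final class InProcessCustomers implements CustomersPublicApi {
    private final Set<String> activeIds;

    InProcessCustomers(Set<String> activeIds) {
        this.activeIds = Set.copyOf(activeIds);
    }

    @Override
    public boolean isActive(String customerId) {
        return activeIds.contains(customerId);
    }
}

// Microservices packaging: the same interface implemented as an HTTP client.
// Assumes a hypothetical endpoint GET {baseUrl}/customers/{id}/active that
// returns "true" or "false" as plain text.
final class HttpCustomersClient implements CustomersPublicApi {
    private final HttpClient http = HttpClient.newHttpClient();
    private final String baseUrl;

    HttpCustomersClient(String baseUrl) {
        this.baseUrl = baseUrl;
    }

    @Override
    public boolean isActive(String customerId) {
        HttpRequest request = HttpRequest.newBuilder(
                URI.create(baseUrl + "/customers/" + customerId + "/active")).GET().build();
        try {
            HttpResponse<String> response = http.send(request, HttpResponse.BodyHandlers.ofString());
            if (response.statusCode() != 200) {
                throw new IllegalStateException("customers service returned " + response.statusCode());
            }
            return Boolean.parseBoolean(response.body().trim());
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while calling customers service", e);
        }
    }
}
```

Code in other domains depends only on `CustomersPublicApi`; which implementation it receives is decided by the packaging and configuration of each deployment.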

Tooling

Creating domains or microservices is a repetitive task, so it is useful to have tooling in place that supports and automates it. This can be a simple bash script or a more sophisticated tool such as Maven Archetype or Angular Schematics.
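Such tooling can start very small. The sketch below, written in Java to match the other examples rather than as a bash script, creates one directory per module for a new domain with Maven-style source folders. The module naming convention is hypothetical and simply mirrors the modules described in this article; a real generator would also write build files.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;

final class DomainScaffolder {
    // Hypothetical naming convention mirroring the modules described above.
    static final List<String> MODULE_SUFFIXES = List.of(
            "domain-model", "domain-logic-api", "domain-logic",
            "persistence-api", "persistence",
            "external-systems-api", "external-systems",
            "public-api", "public-api-impl",
            "application");

    private DomainScaffolder() {}

    /** Creates one directory per module with source folders; returns the module roots. */
    static List<Path> scaffold(Path root, String domain) throws IOException {
        List<Path> modules = new ArrayList<>();
        for (String suffix : MODULE_SUFFIXES) {
            Path module = root.resolve(domain + "-" + suffix);
            Files.createDirectories(module.resolve("src/main/java"));
            Files.createDirectories(module.resolve("src/test/java"));
            modules.add(module);
        }
        return modules;
    }
}
```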

Summary and conclusions

The approach described above has both advantages and disadvantages. Whether to use the modularized approach for the whole application, for individual domains, or for microservices should be a conscious decision. It is not suitable for small or short-lived projects, since its benefits appear only in the long run.

Disadvantages and pitfalls

- Entirely new functionality requires additional boilerplate code in all modules, which slows down initial delivery.

- Without supporting tooling, creating the additional project structure can be mundane work.

Advantages

- Fewer dependencies make it easier to understand the system structure.

- Fewer dependencies reduce the risk of changes and make them less time-consuming.

- Fewer dependencies simplify testing. Each module can be tested end-to-end with less mocking.

- The approach removes unwanted dependencies at the code level, so it is not possible to accidentally use code that should not be used. This differs from the traditional layered approach, where each higher layer (for example, the view) has access to lower-layer code (for example, persistence).